Webscraping with ScraPy

Introduction

Hello Everyone!

Today, we will be using ScraPy to create a cool web scraper! For this demonstration, we will be using the IMDB website, and I chose to focus on the movie Love, Rosie. Our goal is to create a scraper that will give us all the actors working on a specified movie, as well as what other movies/tv shows they have appeared on.

For this project, I used a Macbook and Visual Studios, so this tutorial will teach you how to create your web scraper using those!

Here is a link to my repository: https://github.com/k-song14/IMDB_scraper.git

Initializing our project

Let’s begin by going over how to set up our project. The first thing you’ll want to do is to initialize your project. First, set up a new Github repository. Next, you’ll want to open up your terminal and type the following commands:

conda activate PIC16B scrapy startproject IMDB_scraper cd IMDB_scraper

The line ‘conda activate PIC16B’ will give us access to the ScraPy package, since it’s housed within our PIC16B environment. The next line, ‘scrapy startproject IMDB_scraper’ creates our project, and ‘cd IMDB_scraper’ changes our current working directory so that we’ll be working within our project. You’ll see a lot of files within your project, but don’t worry; we won’t be looking at most of the files. In your spiders directory, create a python file called ‘imdb_spider.py’.

Creat your web scraper

Let’s start writing some code! Begin with the following code:

import scrapy

from scrapy.linkextractors import LinkExtractor

class ImdbSpider(scrapy.Spider):

name='imdb_spider'

start_urls = ['https://www.imdb.com/title/tt1638002/']

Within this code block, we start by importing scrapy and creating an ImdbScpider(scrapy.spider) class. As with any other web scraper, you will want to begin by naming your scraper (I named mine ‘imdb_spider’) and giving it a starting url(s). In this case, since I’ll be focusing on the movie Love, Rosie, the url I included is the IMDB page for the movie.

Now, let’s create our parse methods.

Parse Methods

We will have three parse methods: parse(self, response), parse_full_credits(self, response) and parse_actor_page(self.response). I’ll go over how I made each one!

parse(self, response)

Here is the code for this method:

def parse(self,response):

'''parse method; begins at our initial page (imdb for movie)

goes to our desired next page (cast + crew)

'''

# we know that our next page is:

# https://www.imdb.com/title/tt1638002/fullcredits/?ref_=tt_cl_sm

#using inspect and response css to get link for next page

next_page = response.css("a.ipc-metadata-list-item__label.ipc-metadata-list-item__label--link").attrib['href']

#join urls to get next page

next_page = response.urljoin(next_page)

#this gives us the url we want

#once we get to the next page, call on parse_full_credits method

yield scrapy.Request(next_page, callback=self.parse_full_credits)

For our project, this method assumes we are starting on the movie’s IMDB page, and it will help us navigate to the Cast & Crew page. We can get our desired link by using the inspect element and response.css to get the path to the Cast ad Crew page. We then use the command response.urljoin() to get our desired url, then yield a scrapy request. Within our scrapy request, we have our joined url (named next_page) and a callback request (which is what the program should do after navigating to next_page). Our callback request is self.parse_full_credits, which will take us to our next method.

parse_full_credits(self, response)

Here is the code for this method:

def parse_full_credits(self, response):

'''generates and visits sites of all actors in cast and crew

section of our movie

'''

#empty vector to store joint urls

actor_page = []

#list of relative paths for each actor

actors = [a.attrib["href"] for a in response.css("td.primary_photo a")]

#joins url for each relative path in actors; appends to list actor_page

for i in actors: actor_page.append(response.urljoin(i))

#yields request for each url in list

for link in actor_page:

yield scrapy.Request(link, callback=self.parse_actor_page)

This method assumes we are starting on the “next” page (Cast & Crew). We want this method to help us navigate to each of the individual actors’ IMDB pages. To do so, begin by creating an empty list. Next, create a list comprehension to get the relative paths for each actor. We can do so by using the inspect element and using it and response.css (which allows us to go through the html tags) to find the html tags that correspond to our desired goal. In this case, we want the href (link) for each actor. We use response.css(“td.primary_photo a”) because the paths for each actor is located within the table of actors (td = cell) within the html tag a.

Once we get our list of relative paths, we will join them all to our current url (response.url) so that we can navigate to each one. To do so, we will iterate through out list of relative paths and use response.urljoin() to join it to our url. We then append the url to our empty list. We will then navigate to each url by iterating through our new list of urls. Our callback method for this is self.parse_actor_page, which will take us to our next method.

parse_actor_page(self, response)

Here is the code for this method:

def parse_actor_page(self, response):

'''creates dictionary with movies worked on by actor and

corresponding actor name

'''

#extracts name from header

actor_name = response.css("div div table tbody tr td h1 span.itemprop::text").get()

#extracts movies and tv shows, puts them in a list

movie_or_TV_name = response.css("div b a::text").getall()

for name in movie_or_TV_name:

yield{

"actor": actor_name,

"movie_or_TV_name": name

}

Our final method will give us our dictionary of each actor and the movies/tv shows they’ve been involved in. For the actor names, I used the inspect element and response.css().get() to retrieve the text of the headers for each actor’s page (since their name is the header). For the movies/tv shows they’re involved in, I used response.css().getall(). We want to use the getall() because there are multiple; it returns the elements to us in a list. We use a::text, which gives us the text within the a tag. Finally, we will yield our dictionary, which matches the actors’ names with the movies/shows they’ve been involved in. We must iterate through our list of movies/tv shows.

Exporting csv file

Now that your code is done, all you have to do is create / export your csv file! You can do so by first making sure your current working directory is spiders, then typing the following command into your terminal:

scrapy crawl imdb_spider -o results.csv

And you’re done!

Reccomendations

As an extra task, we will be creating a sorted list with the top movies and TV shows that share actors with the ones we got from our web scraper. Here is the code:

import pandas as pd

#import our data: results.csv

df = pd.read_csv("results.csv")

df.head()

#dataframe of top movies with shared actors

df.groupby("movie_or_TV_name").count().sort_values(['actor'], ascending=False).head(10)

With the initial three lines, we import the necessary package, read our csv file into Jupyter notebook and check to see that the import was successful:

import pandas as pd

df = pd.read_csv("results.csv")

df.head()

| actor | movie_or_TV_name | |

|---|---|---|

| 0 | Tom John Kelly | Love, Rosie |

| 1 | Lily Collins | Gilded Rage |

| 2 | Lily Collins | Titan |

| 3 | Lily Collins | Halo of Stars |

| 4 | Lily Collins | Windfall |

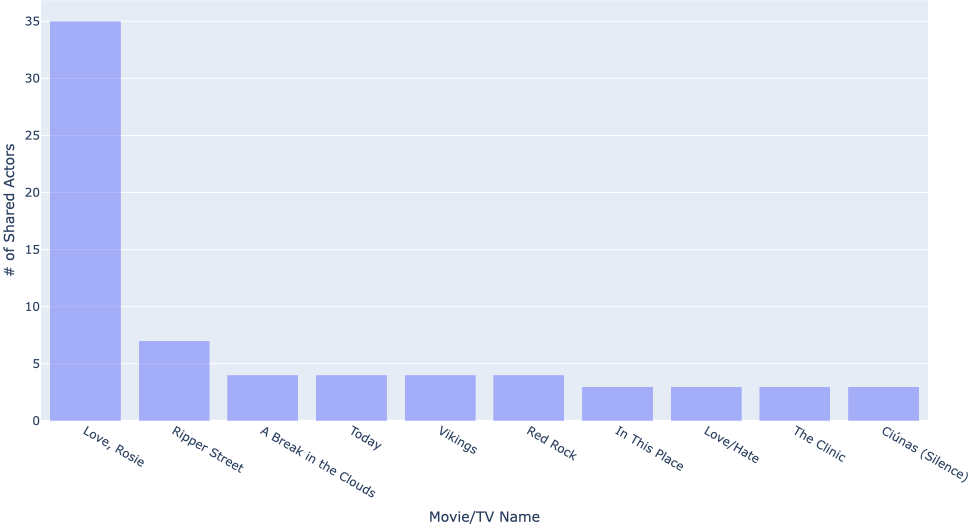

Afterwards, using our last line of code, we get our desired table by grouping our data according to movie_or_TV_show and sorting the values in descending values. Since we just want the top movies with the most shared actors, we’ll just create a table with the first 10 films:

#dataframe of top movies with shared actors

df.groupby("movie_or_TV_name").count().sort_values(['actor'], ascending=False).head(10)

| actor | |

|---|---|

| movie_or_TV_name | |

| Love, Rosie | 35 |

| Ripper Street | 7 |

| A Break in the Clouds | 4 |

| Today | 4 |

| Vikings | 4 |

| Red Rock | 4 |

| In This Place | 3 |

| Love/Hate | 3 |

| The Clinic | 3 |

| Ciúnas (Silence) | 3 |

Now that we have both our csv file and our table of shared actors, let’s create a visualization! I’ll be using plotly to create a histogram with our new dataframe of shared actors. First, I saved the table as a csv file for safekeeping with the following code:

df = pd.read_csv("shared.csv")

Then, I imported plotly and used it to create the following histogram with our shared actors data:

from plotly import express as px

fig = px.histogram(df,

x = "movie_or_TV_name",

y="actor",

opacity=0.5).update_layout(xaxis_title = "Movie/TV Name", # change x-axis label

yaxis_title="# of Shared Actors") # change y-axis label

# reduce whitespace

fig.update_layout(margin={"r":0,"t":0,"l":0,"b":0})

# show the plot

fig.show()

And now we’re done! Thank you so much for reading!